AI Ethics in Business

Decision-makers may look to artificial intelligence (AI) and machine learning (ML) technologies, which learn from training datasets and make predictions that can affect business outcomes, to achieve their business goals. However, debates around the potential impacts of such technologies on businesses and society continue. How are decision-makers and their organizations approaching AI ethics?

One minute insights:

Most are concerned about the potential ethical impacts of artificial intelligence and machine learning (AI/ML) technologies used in business and feel businesses aren’t taking those impacts seriously enough

Policies around AI ethics are not commonplace, though many report that their organization conducts tests for data biases

Skills gaps and issues of executive buy-in and leadership are cited as the main challenges to developing AI ethics-focused processes in businesses

Most believe the CIO should be ultimately responsible for AI ethics within the organization

Decision-makers are concerned about the wider impacts of AI/ML technology on society, identifying a lack of human determinism and weaponization as the top concerns

Most decision-makers are concerned about the impacts of AI/ML technologies on society, and agree that businesses aren’t taking the ethical impacts of such technologies seriously enough

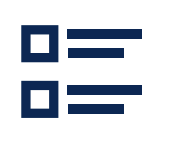

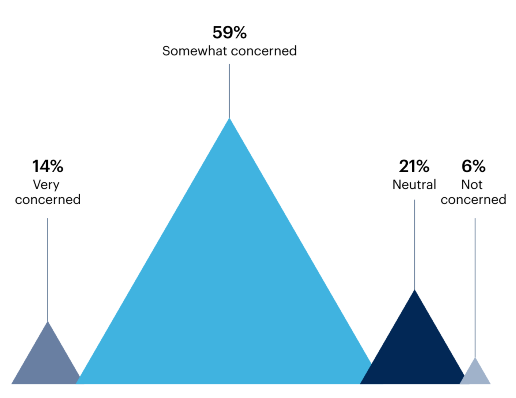

Over three-quarters (78%) of decision-makers are concerned by the potential impacts of the AI/ML technologies used by businesses.

Are you concerned by potential ethical impacts of artificial intelligence (AI) / machine learning (ML) technologies used by businesses?

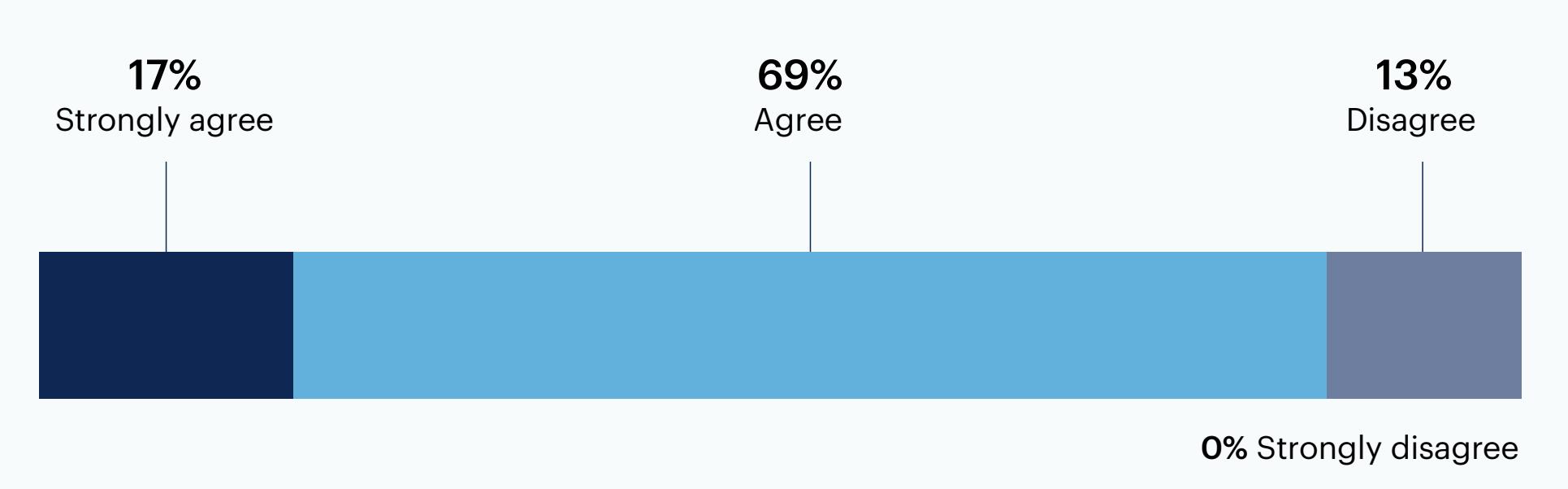

86% agree that businesses aren’t taking the ethical impacts of AI technology on society seriously enough.

To what extent do you agree with the following: “Businesses are not taking the ethical impacts of AI technology on society seriously enough.”

Ethics should not be an afterthought when building an AI/ML solution.

Concerns regarding ethics of AI [differ] a lot from one industry to another and policies should be applied depending on industry.

Beyond testing data for biases, policies and initiatives around AI/ML ethics aren’t commonplace but most are satisfied with their organization’s approach

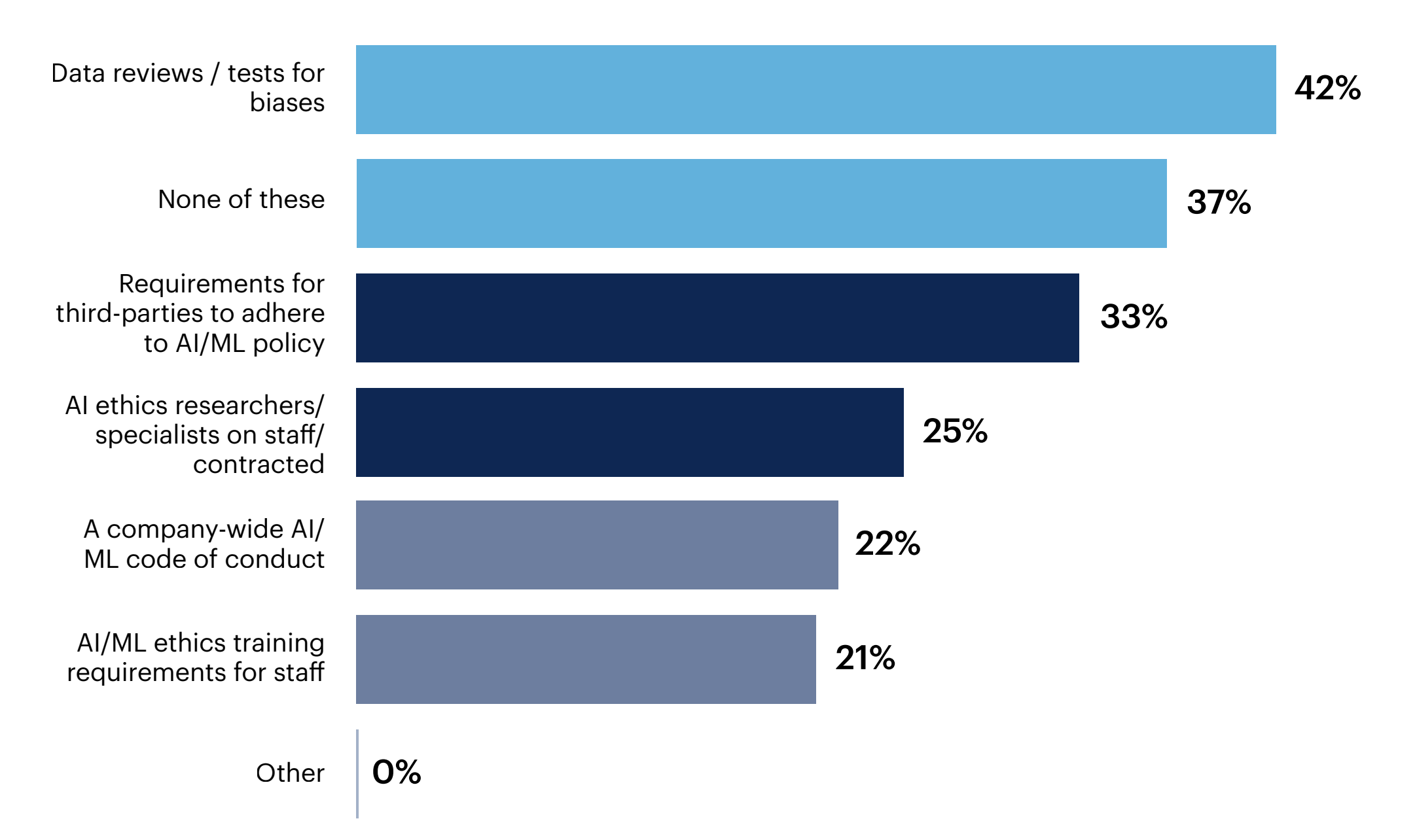

When it comes to policies and initiatives for AI/ML ethics in the organization, many (42%) decision-makers report that their organization has data reviews / tests for biases in place.

However, over one-third (37%) don’t have any of the policies or initiatives listed in place.

Does your organization have any of the following in place?

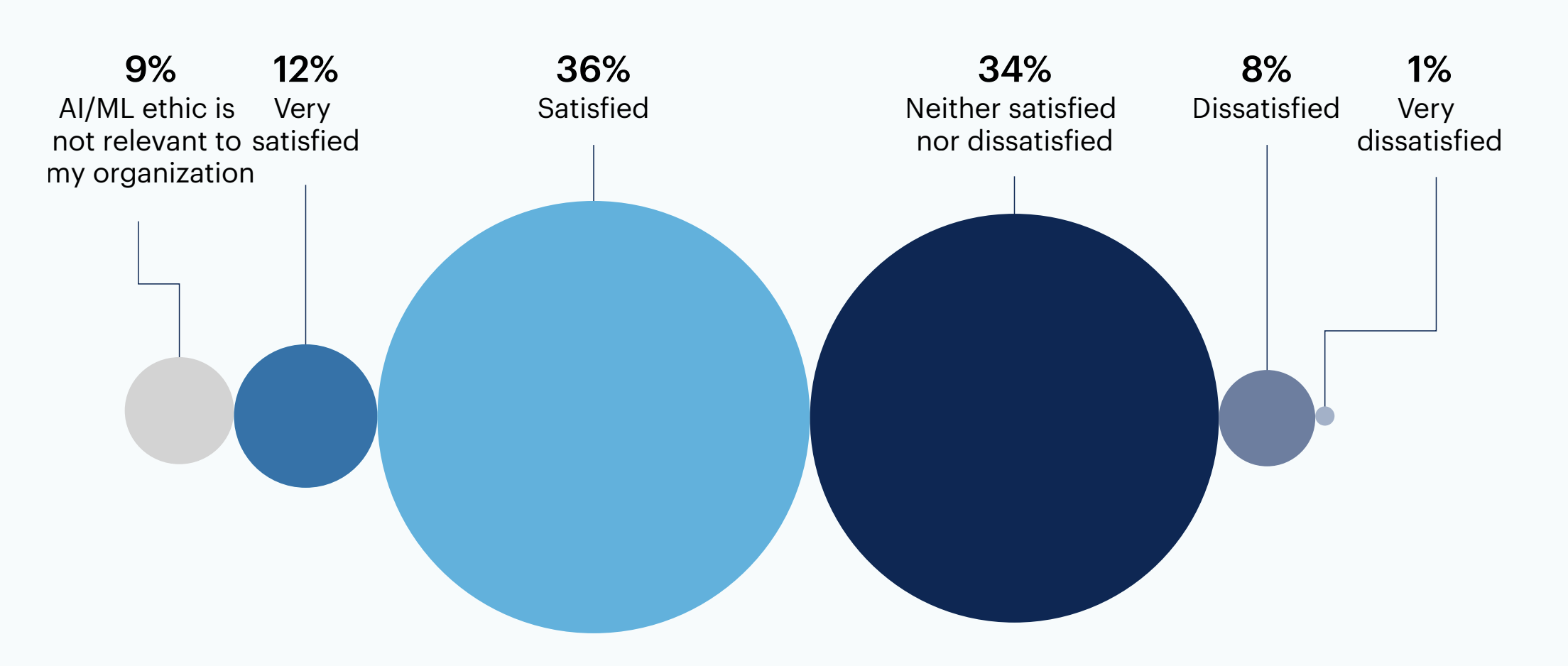

Nearly half of decision-makers (48%) are satisfied with their organization’s approach to AI/ML ethics.

How satisfied are you with your organization’s approach to AI/ML ethics?

I don’t have large concerns about my organization. Most strategies have followed a business case.

This is new to my company and is one of the phases in our Data and Analytics strategy. The concepts such as the ethical implications will be a topic within this work stream.

Skills gaps are the most cited challenge for implementing AI ethics processes, as well as executive buy-in and leadership—and the CIO should be ultimately responsible

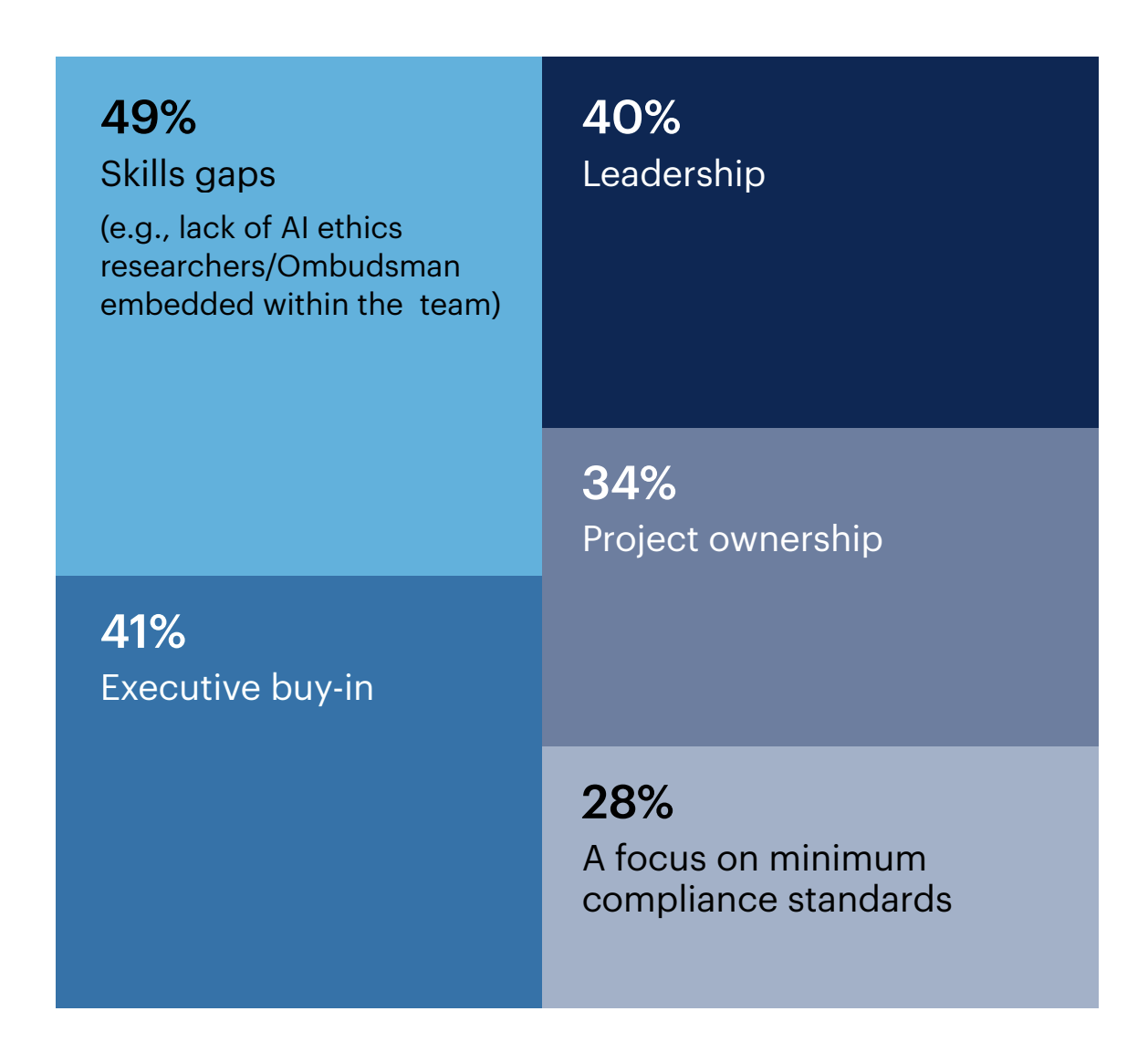

When it comes to challenges to building AI/ML ethical processes within businesses, the top concerns are skills gaps (49%), executive buy-in (41%), and leadership (40%).

Which of the following do you see as the biggest challenges in building AI/ML ethical processes within businesses?

Lack of third-party vendor risk assessments 25%, Engineer buy-in 23%, Lack of consistent geographical governance/regulations 23%, A focus on continuous growth 22%, Lack of data origin clarity 22%, Technological limitations 21%, None of these 3%, Other 0%

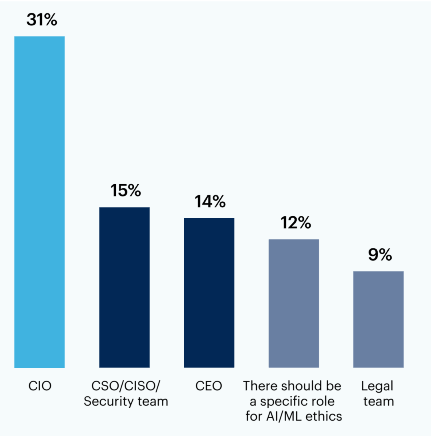

The responsibility for AI/ML ethics within the business should ultimately fall on the CIO, according to 31% of decision-makers.

Where should the responsibility/ownership of AI/ML technology ethics ultimately fall within businesses?

Individual employees (e.g., engineers/data scientists) 7%, One specific role/individual shouldn’t have sole responsibility 7%, Not sure 4%, Other 0%

Lack of transparency of AI tools is a top concern.

Most of [the] tech guys are not even aware of the subject. Most of [the] executives close their eyes not to lose potential projects. As a fellow leader of [the] AI team, it is hard to be in between.

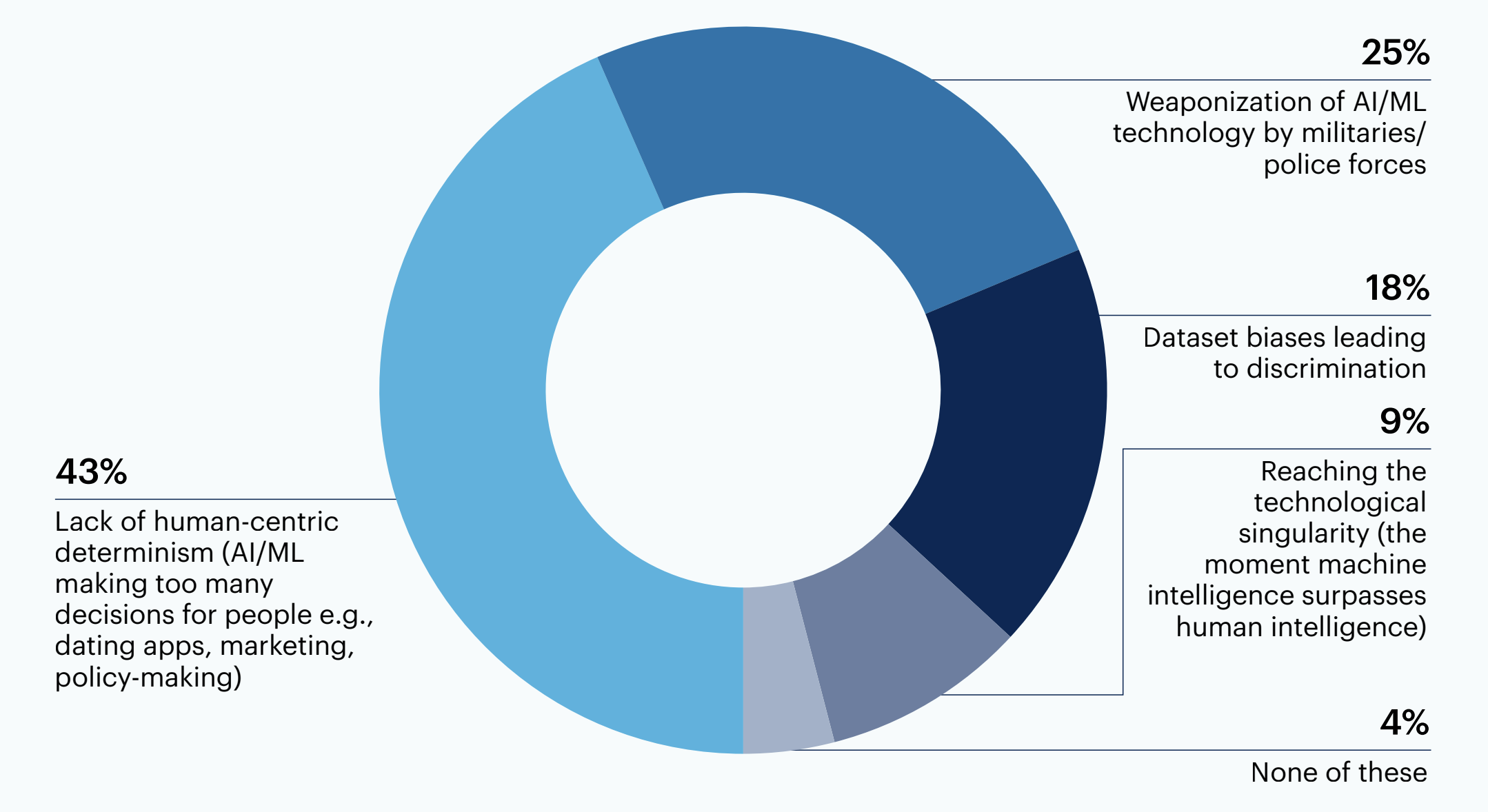

Most decision-makers are concerned about the wider impacts of AI/ML tech on society, fearing a lack of human-centric determinism and possible weaponization

When it comes to the wider impacts of AI/ML technology on society, almost three-quarters (73%) are at least somewhat concerned.

Generally speaking, how concerned are you about the impact of AI/ML technology on society?

The top concern for the impact of AI/ML technology on society is the lack of human-centric determinism (43%), followed by the weaponization of AI/ML technology (25%).

What concerns you most about the impact of AI/ML technology on society?

Other 0%

AI/ML will be embedded into our lives progressively whether we want it or not. There is a need for collective consciousness of enterprises and states on how they will use [AI/ML] technology.

AI/ML can be a great business tool for assisting in making business decisions. It should not be the end all for making the decision. The human aspect of business makes it hard for AI/ML to sometimes make the correct decisions or ethical decisions.

AI is biased because it is designed by humans who are biased. It can also be easily abused and leveraged in ways that are not valuable in the long run. Someone should always be asking the question - how can this be misused or abused by some who can tinker with the data, misinterpret the data, or intentionally misuse the data for their own gain.

AI/ML work needs to include researchers and engineers from underrepresented groups.

Any legal guidelines might help, like GDPR.


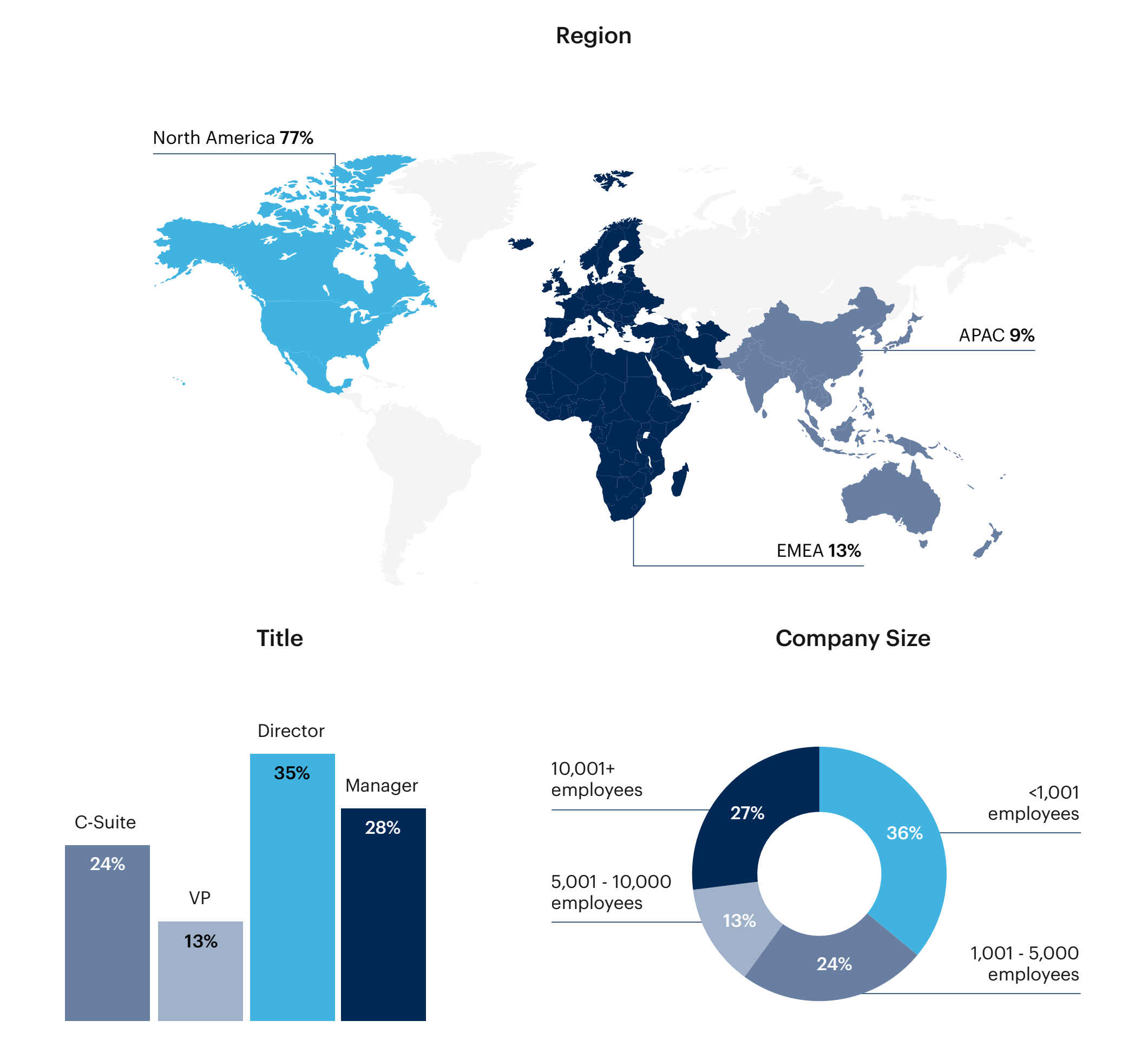

Respondent breakdown